History

This company was founded after the Covid-19 pandemic. It began on a piece of paper, as the dream of a young boy named Sean Anthony, whose smart and creative ideas for innovative products brought the company together into a unified whole with dreams to achieve.

Vision

"It doesn't matter if your logic is limited, because your imagination is unlimited."

Mission

"Remake our world in a new and unique way, giving birth to new dreams and imagination from human beings, so that all our creativity and skills are put to use for a better future."

Product & Service

We are a brand that combines technology and science to build AI that will help create a better future for all humanity.

We will provide programs that can be modified, so you can customize an AI according to your wishes. We will develop them for you so that they follow safety regulations and procedures.

Privacy & Policy

The regulatory proposal aims to provide AI developers, deployers and users with clear requirements and obligations regarding specific uses of AI. At the same time, the proposal seeks to reduce administrative and financial burdens for business, in particular small and medium-sized enterprises (SMEs).

The proposal is part of a wider AI package, which also includes the updated Coordinated Plan on AI. Together, the Regulatory Framework and the Coordinated Plan will guarantee the safety and fundamental rights of people and businesses when it comes to AI. They will also strengthen uptake, investment and innovation in AI across Indonesia.

Why do we need rules on AI?

The proposed AI regulation ensures that Indonesians can trust what AI has to offer. While most AI systems pose limited to no risk and can contribute to solving many societal challenges, certain AI systems create risks that we must address to avoid undesirable outcomes.

For example, it is often not possible to find out why an AI system has made a decision or prediction and taken a particular action. So, it may become difficult to assess whether someone has been unfairly disadvantaged, such as in a hiring decision or in an application for a public benefit scheme.

Although existing legislation provides some protection, it is insufficient to address the specific challenges AI systems may bring.

The proposed rules will:

- address risks specifically created by AI applications;

- propose a list of high-risk applications;

- set clear requirements for AI systems for high-risk applications;

- define specific obligations for AI users and providers of high-risk applications;

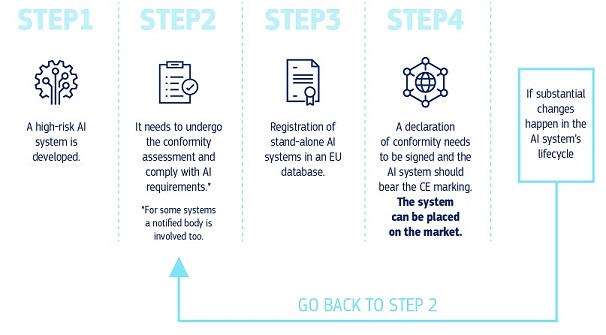

- propose a conformity assessment before the AI system is put into service or placed on the market;

- propose enforcement after such an AI system is placed on the market;

- propose a governance structure at the Indonesian national level.

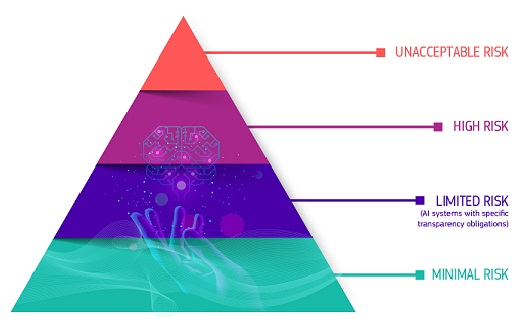

A risk-based approach

The Regulatory Framework defines 4 levels of risk in AI:

- Unacceptable risk

- High risk

- Limited risk

- Minimal or no risk

Unacceptable risk

All AI systems considered a clear threat to the safety, livelihoods and rights of people will be banned, from social scoring by governments to toys using voice assistance that encourages dangerous behaviour.

High risk

AI systems identified as high-risk include AI technology used in:

- critical infrastructures (e.g. transport), that could put the life and health of citizens at risk;

- educational or vocational training, that may determine the access to education and professional course of someone’s life (e.g. scoring of exams);

- safety components of products (e.g. AI application in robot-assisted surgery);

- employment, management of workers and access to self-employment (e.g. CV-sorting software for recruitment procedures);

- essential private and public services (e.g. credit scoring denying citizens opportunity to obtain a loan);

- law enforcement that may interfere with people’s fundamental rights (e.g. evaluation of the reliability of evidence);

- migration, asylum and border control management (e.g. verification of authenticity of travel documents);

- administration of justice and democratic processes (e.g. applying the law to a concrete set of facts).

High-risk AI systems will be subject to strict obligations before they can be put on the market:

- adequate risk assessment and mitigation systems;

- high quality of the datasets feeding the system to minimise risks and discriminatory outcomes;

- logging of activity to ensure traceability of results;

- detailed documentation providing all information necessary on the system and its purpose for authorities to assess its compliance;

- clear and adequate information to the user;

- appropriate human oversight measures to minimise risk;

- high level of robustness, security and accuracy.
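The obligations listed above can be read as a pre-market checklist. The following is a minimal sketch of that idea, not an official compliance tool: the identifier names and the `missing_obligations` helper are hypothetical and were chosen only for illustration.

```python
# Hypothetical short names for the seven obligations listed above.
HIGH_RISK_OBLIGATIONS = (
    "risk_assessment_and_mitigation",
    "high_quality_datasets",
    "activity_logging",
    "detailed_documentation",
    "clear_user_information",
    "human_oversight",
    "robustness_security_accuracy",
)


def missing_obligations(completed):
    """Return the obligations not yet met, in the order listed above."""
    done = set(completed)
    return [item for item in HIGH_RISK_OBLIGATIONS if item not in done]


def ready_for_market(completed):
    """A high-risk system is ready only when no obligation is missing."""
    return not missing_obligations(completed)
```

For example, calling `missing_obligations(["activity_logging", "human_oversight"])` would return the five remaining items, and `ready_for_market` would return `False` until all seven are covered.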

All remote biometric identification systems are considered high risk and subject to strict requirements. The use of remote biometric identification in publicly accessible spaces for law enforcement purposes is, in principle, prohibited.

Narrow exceptions are strictly defined and regulated, such as when necessary to search for a missing child, to prevent a specific and imminent terrorist threat or to detect, locate, identify or prosecute a perpetrator or suspect of a serious criminal offence.

Such use is subject to authorization by a judicial or other independent body and to appropriate limits in time, geographic reach and the databases searched.

Limited risk

Limited risk refers to AI systems with specific transparency obligations. When using AI systems such as chatbots, users should be aware that they are interacting with a machine so they can take an informed decision to continue or step back.

Minimal or no risk

The proposal allows the free use of minimal-risk AI. This includes applications such as AI-enabled video games or spam filters. The vast majority of AI systems currently used in Indonesia fall into this category.
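The four risk levels described above can be modelled as a simple lookup. This is only an illustrative sketch: the `RiskLevel` enum, the use-case labels and the `treatment` helper are hypothetical names, and the example mappings are taken from the examples given in the text rather than from any legal classification.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "minimal or no risk"


# Example use cases mentioned in the text; the keys are hypothetical labels.
EXAMPLE_USE_CASES = {
    "government_social_scoring": RiskLevel.UNACCEPTABLE,
    "exam_scoring": RiskLevel.HIGH,
    "cv_sorting_for_recruitment": RiskLevel.HIGH,
    "credit_scoring": RiskLevel.HIGH,
    "chatbot": RiskLevel.LIMITED,
    "spam_filter": RiskLevel.MINIMAL,
    "ai_enabled_video_game": RiskLevel.MINIMAL,
}

# One-line summary of how each level is treated, paraphrasing the text.
TREATMENT = {
    RiskLevel.UNACCEPTABLE: "banned",
    RiskLevel.HIGH: "strict obligations before being put on the market",
    RiskLevel.LIMITED: "specific transparency obligations",
    RiskLevel.MINIMAL: "free use",
}


def treatment(use_case):
    """Look up how an example use case is treated under the framework."""
    return TREATMENT[EXAMPLE_USE_CASES[use_case]]
```

Under these assumptions, `treatment("chatbot")` returns the transparency summary, while `treatment("government_social_scoring")` returns `"banned"`.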

Once an AI system is on the market, authorities are in charge of market surveillance, users ensure human oversight and monitoring, and providers have a post-market monitoring system in place. Providers and users will also report serious incidents and malfunctioning.

Future-proof legislation

As AI is a fast-evolving technology, the proposal has a future-proof approach, allowing rules to adapt to technological change. AI applications should remain trustworthy even after they have been placed on the market. This requires ongoing quality and risk management by providers.
